Mark Zuckerberg rocked the internet this week when the Meta CEO announced that the tech giant would end its independent fact-checking program. The program, implemented in 2016, will be replaced by Meta’s own version of Community Notes, a crowdsourced approach to reviewing online content used by X, the social media platform formerly known as Twitter.

So what exactly is Community Notes, and how does it work?

How Community Notes works

Meta has not released details on how it will allow users of Facebook, Instagram and other social platforms to monitor content, said Melissa Mahtani, executive producer of CBS News Confirmed.

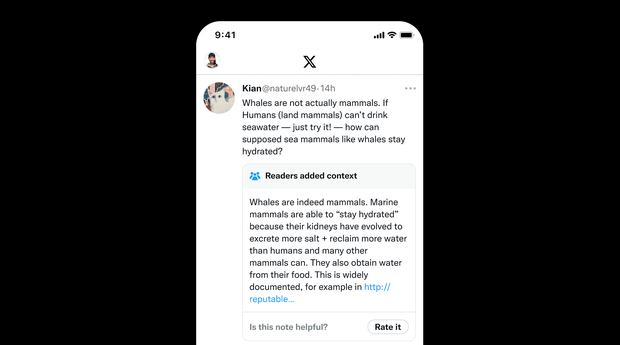

On X, Community Notes works by leaving fact-checking up to the community. Approved contributors flag content they deem false or misleading by attaching notes that provide further context. Here is an example of a community note provided by X on its site:

Becoming an approved contributor on X “doesn’t take much,” Mahtani said. Any X user who has an active phone number and has been on the platform for at least six months without violations is eligible to volunteer as a contributor, she said. Contributors are protected by anonymity.

When an approved contributor finds a post to be false or misleading, that person writes a note providing users with additional context. The note appears below the original post.

Once an approved contributor adds a note, it is still not visible to regular users on X. Before it becomes visible, other approved contributors must vote on whether the note is useful. This is where “things get complicated,” Mahtani said.

Is the note useful?

Once a note is added to a post on X, other approved contributors rate the note based on its usefulness.

Mahtani explained: “Other contributors should review the origin and accuracy of this note and vote on whether it is useful or not. If they vote that it is helpful, and that’s the tricky part, the company says an algorithm looks at the ideological spectrum of all the contributors who voted. If they believe those voters are diverse, that is published.”

The algorithm decides

According to the X website, the purpose of the so-called bridging-based algorithm is to “identify notes that are useful to a broad audience, regardless of point of view.”

In other words, if the algorithm sees that the contributors who voted for a given note represent an ideologically diverse group, then the note becomes visible on the platform. But if the algorithm finds that the voting contributors hold political views that are too uniform, a possible sign of bias, “the public never sees it,” Mahtani said.
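The rule Mahtani describes can be pictured with a toy sketch. This is not X's actual algorithm, whose details the article does not cover. The `leaning` score, the `min_helpful` and `min_spread` thresholds, and the function name are all hypothetical, chosen only to illustrate the idea that a note needs helpful votes from contributors with differing viewpoints.

```python
from dataclasses import dataclass


@dataclass
class Rating:
    helpful: bool    # did this contributor rate the note as helpful?
    leaning: float   # hypothetical viewpoint score from -1.0 to 1.0


def note_is_shown(ratings, min_helpful=5, min_spread=1.0):
    """Toy rule (an assumption, not X's method): show a note only if enough
    contributors found it helpful and those contributors span a wide
    range of viewpoints."""
    helpful_leanings = [r.leaning for r in ratings if r.helpful]
    if len(helpful_leanings) < min_helpful:
        return False
    # Spread between the most distant viewpoints among the helpful voters.
    spread = max(helpful_leanings) - min(helpful_leanings)
    return spread >= min_spread


# Five helpful votes from like-minded contributors: the note stays hidden.
one_sided = [Rating(True, x) for x in (0.6, 0.7, 0.8, 0.9, 0.75)]
# Five helpful votes from across the spectrum: the note is shown.
mixed = [Rating(True, x) for x in (-0.8, -0.3, 0.1, 0.5, 0.9)]

print(note_is_shown(one_sided))  # False
print(note_is_shown(mixed))      # True
```

In this simplified picture, the number of helpful votes alone is never enough. Agreement across viewpoints is what moves a note from hidden to public.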

Problems with Community Notes

One concern is that notes, even accurate ones, may never be seen by readers. How quickly a note is made public is also important, so that false or misleading information cannot spread unchallenged.

A report in October by the Center for Countering Digital Hate, a nonprofit organization, analyzed the Community Notes feature and found that accurate notes correcting false and misleading claims about the U.S. election were not displayed on 209 posts in a sample of 283 deemed misleading, or 74%.

More information on how X’s Community Notes works can be found on X’s Community Notes page.

CBS News has a dedicated editorial team, CBS News Confirmed, that fact-checks, exposes misinformation and provides critical context. You can follow CBS News Confirmed on Instagram and TikTok.